Entering the Final Phase with AI-Powered Scene Creation

With the arrival of Sprint 3, we’ve entered the final development phase of the SENSO3D project—and it’s already off to a powerful start.

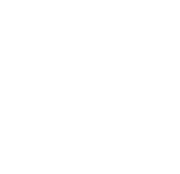

This month, we began testing our highly anticipated Prompt-Based Scene Creation Tool, a revolutionary feature that enables non-technical users to build immersive 3D environments using natural language.

We’re entering the most refined and user-focused sprint so far, with an emphasis on polish, performance, and preparing the platform for real-world applications.

Prompt-Based Tool: From Text to 3D in Seconds

Imagine typing:

“A modern meeting room with a round table, 8 chairs, and a projector screen.”

…and seeing it appear as a fully structured, VR-ready 3D scene.

That’s exactly what our Prompt-Based Scene Tool now enables.

Built using advanced natural language processing (NLP) and connected to our structured 3D model library, the tool interprets object names, layouts, and even spatial context to generate realistic virtual scenes automatically.
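To make the idea concrete, here is a minimal sketch of the first step: turning a prompt into a list of objects and counts matched against a model library. It is not the SENSO3D implementation. It uses a simple regular expression as a stand-in for the NLP model, and the `MODEL_LIBRARY` catalog, its model IDs, and the `parse_prompt` function are all hypothetical names chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical catalog standing in for the structured 3D model library.
MODEL_LIBRARY = {
    "round table": "furniture/table_round",
    "chair": "furniture/chair_basic",
    "projector screen": "av/projector_screen",
}


@dataclass
class SceneObject:
    name: str
    model_id: str
    count: int


def parse_prompt(prompt: str) -> List[SceneObject]:
    """Find known object names in the prompt and read an optional leading number."""
    text = prompt.lower()
    objects = []
    for name, model_id in MODEL_LIBRARY.items():
        # Matches e.g. "8 chairs", "a round table", or "projector screen".
        pattern = rf"(?:(\d+)\s+)?{re.escape(name)}s?\b"
        match = re.search(pattern, text)
        if match:
            count = int(match.group(1)) if match.group(1) else 1
            objects.append(SceneObject(name, model_id, count))
    return objects


scene = parse_prompt(
    "A modern meeting room with a round table, 8 chairs, and a projector screen."
)
for obj in scene:
    print(obj.count, obj.name, "->", obj.model_id)
```

A real pipeline would also need to resolve layout and spatial relations (for example, placing the chairs around the table), which this sketch leaves out.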

Key Features:

This tool dramatically reduces the complexity of XR scene creation—perfect for designers, educators, marketers, or anyone without 3D modeling expertise.

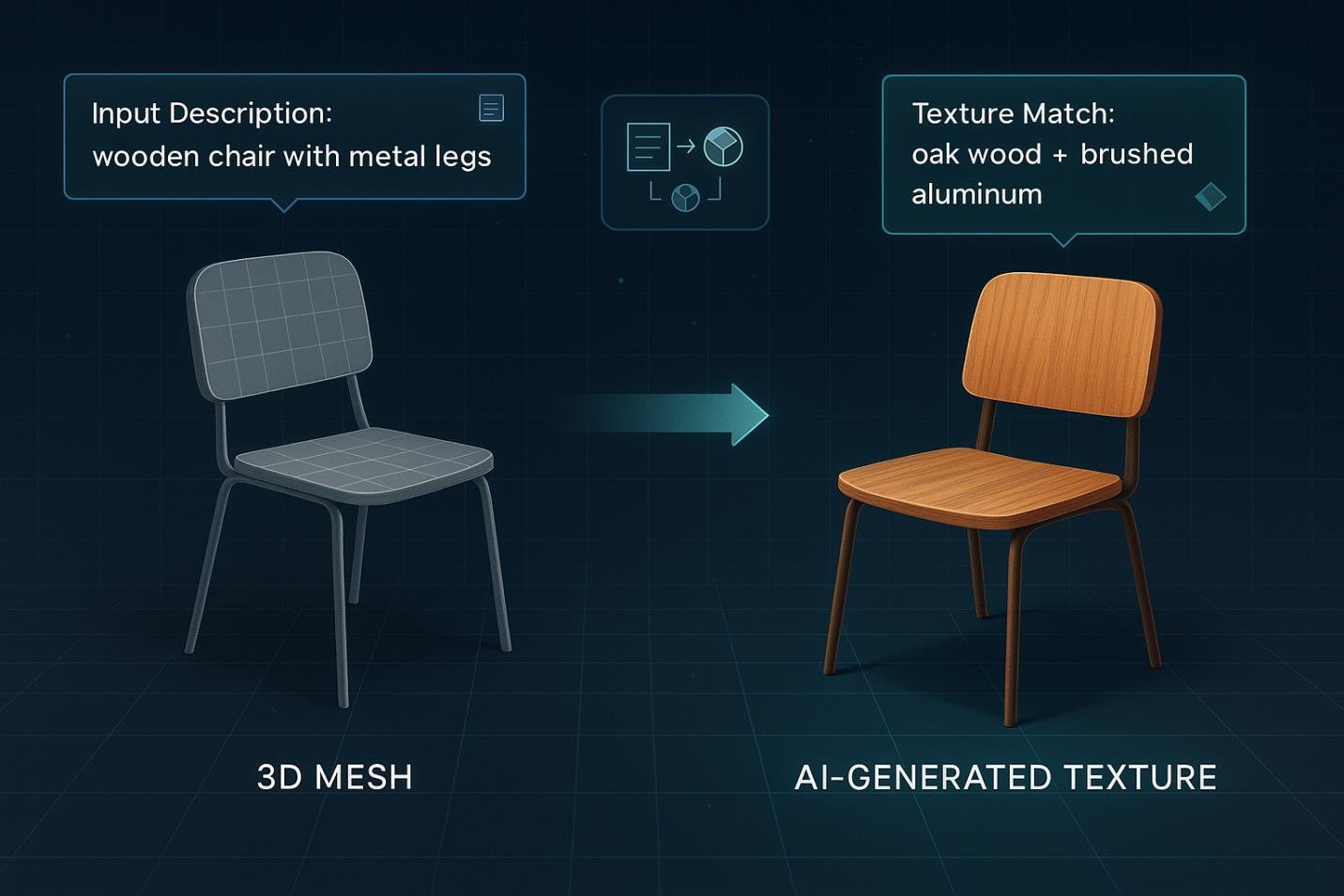

Texture Generation AI in Action

Our team has also kicked off testing of the AI-driven texture generation system, which pairs with our 2D-to-3D modeling pipeline.

Here’s how it works:

1. The object detection model identifies an object and generates a descriptive profile (e.g., “wooden chair with metal legs”).

2. The texture AI model applies a matching material and color palette, simulating realistic textures directly on the mesh.

This means even untextured objects now appear photorealistic, significantly enhancing the final visual quality of the scenes.
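As a rough illustration of how a descriptive profile could drive material selection, the sketch below maps a profile string to a material preset for each part. It assumes a plain keyword lookup in place of the actual texture AI model, and the `MATERIALS` presets, their values, and the `materials_from_profile` function are hypothetical.

```python
# Hypothetical material presets; names and values are illustrative only.
MATERIALS = {
    "wooden": {"base_color": "#8B5A2B", "roughness": 0.7, "metallic": 0.0},
    "metal": {"base_color": "#B0B0B0", "roughness": 0.3, "metallic": 1.0},
}
DEFAULT_MATERIAL = {"base_color": "#CCCCCC", "roughness": 0.5, "metallic": 0.0}


def materials_from_profile(profile: str) -> dict:
    """Split a profile such as 'wooden chair with metal legs' into parts
    and pick a material preset for each part."""
    assignments = {}
    for part in profile.lower().split(" with "):
        words = part.split()
        if not words:
            continue
        part_name = words[-1]  # last word names the part, e.g. "chair" or "legs"
        # First word that matches a known material keyword, if any.
        keyword = next((w for w in words if w in MATERIALS), None)
        assignments[part_name] = MATERIALS.get(keyword, DEFAULT_MATERIAL)
    return assignments


result = materials_from_profile("wooden chair with metal legs")
for part_name, material in result.items():
    print(part_name, material)
```

Parts with no recognized material keyword fall back to a neutral default, so every part of the mesh still receives some material.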

Optimized Unity Import Pipeline

We’ve further refined our Unity import automation pipeline, focusing on:

Assets imported via this pipeline are VR-ready, fully integrated with collision detection, lighting presets, and real-time interactions.

Collecting User Feedback

This month also saw the start of internal user testing across several pilot use cases, including:

We’re actively gathering feedback to:

Initial reactions are overwhelmingly positive, especially among non-technical testers, an early sign that our goal of democratizing 3D content creation is within reach.

What’s Coming Next

As we head into February, our goals include:

We’re closer than ever to delivering a platform that bridges AI, creativity, and XR—and the final stretch is looking strong.