SENSO3D Enters a New Phase of Complexity and Capability

November has been an intense month of scaling and sophistication for the SENSO3D project. As we approach the conclusion of Sprint 2, we’ve successfully expanded the functionality and complexity of both our AI engine and Unity-based immersive environments.

From improving model accuracy to building smarter 3D workflows, we’re taking major strides toward delivering an intelligent and automated 3D scene generation system.

AI Can Now Recognize Over 50 Object Categories

One of the standout achievements this month is the expansion of our AI object classification system. Our detection models now identify and classify 50+ object types, enabling scene construction with far greater detail and diversity.

These new object categories include:

This expansion is crucial for automated scene population, and each recognized object is now paired with comprehensive metadata tagging for better categorization, filtering, and placement.
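To make the idea of metadata tagging concrete, here is a minimal Python sketch of how a recognized object could carry tags that drive filtering and placement. It is an illustration only, not the SENSO3D implementation: the `DetectedObject` class, the `filter_by_tag` helper, the category labels, and the tag names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DetectedObject:
    """One recognized object plus the metadata used to filter and place it.

    All field names here are assumptions made for illustration.
    """
    label: str                                    # hypothetical category name
    confidence: float                             # detector score in [0, 1]
    tags: set[str] = field(default_factory=set)   # free-form metadata tags


def filter_by_tag(objects, tag, min_confidence=0.5):
    """Return the objects that carry `tag` and meet the confidence threshold."""
    return [o for o in objects if tag in o.tags and o.confidence >= min_confidence]


# Hypothetical detections; the labels and tags are invented for this example.
detections = [
    DetectedObject("chair", 0.92, {"furniture", "floor-placed"}),
    DetectedObject("lamp", 0.40, {"lighting", "floor-placed"}),
    DetectedObject("monitor", 0.81, {"electronics", "surface-placed"}),
]

# Select only confidently detected objects that belong on the floor.
floor_items = filter_by_tag(detections, "floor-placed")
print([o.label for o in floor_items])  # prints ['chair']
```

Keeping the tags as a plain set means a scene-population step can combine several filters (for example, placement type and category) without needing to know the full list of object types in advance.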

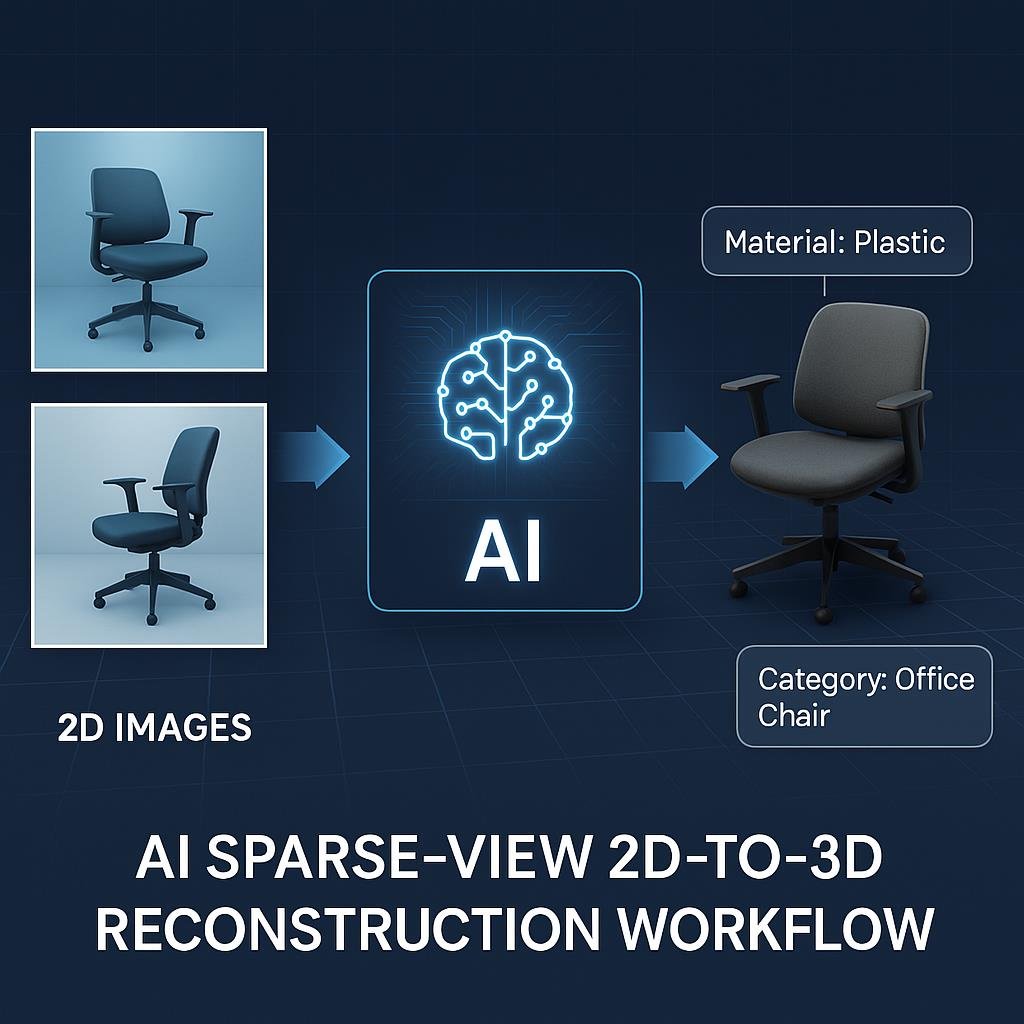

Sparse-View Reconstruction: A Breakthrough in AI Efficiency

We’ve launched a new sparse-view reconstruction pipeline, designed to build realistic 3D models from as few as one or two 2D images. This is a major leap toward usability for non-technical users.

Key benefits include:

This AI innovation complements our previous work with structured-light scanning, giving users more options for 3D content generation.

Scene Complexity Grows in Unity

The immersive environments being built in Unity have grown significantly more dynamic. November’s updates include:

Modular Lighting System

This automation reduces manual work and ensures a consistent pipeline for importing AI-generated and scanned 3D models.

Scene Navigation

VR Object Interaction

Each update enhances user immersion and interactivity, making our environments suitable for a wide range of use cases, from virtual training to remote collaboration and product demos.

Preparing for Sprint 2 Completion

As we move into December, we’re preparing to wrap up Sprint 2 with:

We’re also preparing our first demo video showing real-time interaction within the Unity scenes—stay tuned!

The Vision is Taking Shape

Each line of code, each scanned object, and each AI training cycle is bringing us closer to a world where 3D content creation is smart, fast, and accessible to everyone, not just experts.

Thanks for following along as SENSO3D evolves into a full-featured immersive tech platform.